RPKI Trust Anchor Malfunctions: A Review of the Last 3.5 Years

The Resource Public Key Infrastructure (RPKI) is a framework designed to secure Internet routing. RPKI binds Internet number resources (such as IP prefixes and AS numbers) to digital certificates, providing a way to verify the legitimacy of route announcements. This helps prevent routing incidents such as certain types of prefix hijacking, improving overall network security.
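To make route origin validation concrete, here is a minimal Python sketch of the classification described in RFC 6811. It assumes the validator has already turned ROAs into Validated ROA Payloads (VRPs), each a (prefix, maximum length, origin AS) triple. The `VRP` class and `validate_origin` function are illustrative names, not the API of any particular validator.

```python
from dataclasses import dataclass
from ipaddress import IPv4Network, IPv6Network, ip_network
from typing import Iterable, Union

Network = Union[IPv4Network, IPv6Network]


@dataclass(frozen=True)
class VRP:
    """A Validated ROA Payload: an AS allowed to originate a prefix up to max_length."""
    prefix: Network
    max_length: int
    asn: int


def validate_origin(route_prefix: str, origin_asn: int, vrps: Iterable[VRP]) -> str:
    """Classify a BGP announcement as 'valid', 'invalid' or 'not-found' (RFC 6811 style)."""
    route = ip_network(route_prefix)
    covered = False
    for vrp in vrps:
        # A VRP covers the route if the route is the VRP prefix or more specific.
        if route.version == vrp.prefix.version and route.subnet_of(vrp.prefix):
            covered = True
            # A covering VRP matches only if origin AS and prefix length both agree.
            if vrp.asn == origin_asn and route.prefixlen <= vrp.max_length:
                return "valid"
    # Covered but never matched -> invalid; not covered at all -> not-found.
    return "invalid" if covered else "not-found"


if __name__ == "__main__":
    vrps = [VRP(ip_network("192.0.2.0/24"), 24, 64500)]
    print(validate_origin("192.0.2.0/24", 64500, vrps))     # valid
    print(validate_origin("192.0.2.0/24", 64501, vrps))     # invalid
    print(validate_origin("198.51.100.0/24", 64500, vrps))  # not-found
    print(validate_origin("192.0.2.0/24", 64501, []))       # not-found: VRPs gone
```

The last line shows what happens when the VRPs of a Trust Anchor disappear: the hijack-like announcement from AS 64501 is no longer invalid but not-found. Since networks deploying route origin validation typically drop only invalid routes, traffic keeps flowing, but the protection is gone.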

A Trust Anchor (TA) represents the top-level certification authority (CA) that is inherently trusted. It serves as the starting point for certificate path validation, issuing certificates for its own resources and for lower-level certification authorities. Each Regional Internet Registry (RIR) acts as a TA for its region: AFRINIC for Africa; APNIC for Asia and Oceania; ARIN for North America; LACNIC for Latin America and the Caribbean; and RIPE NCC for Europe, the Middle East, and parts of Central Asia.

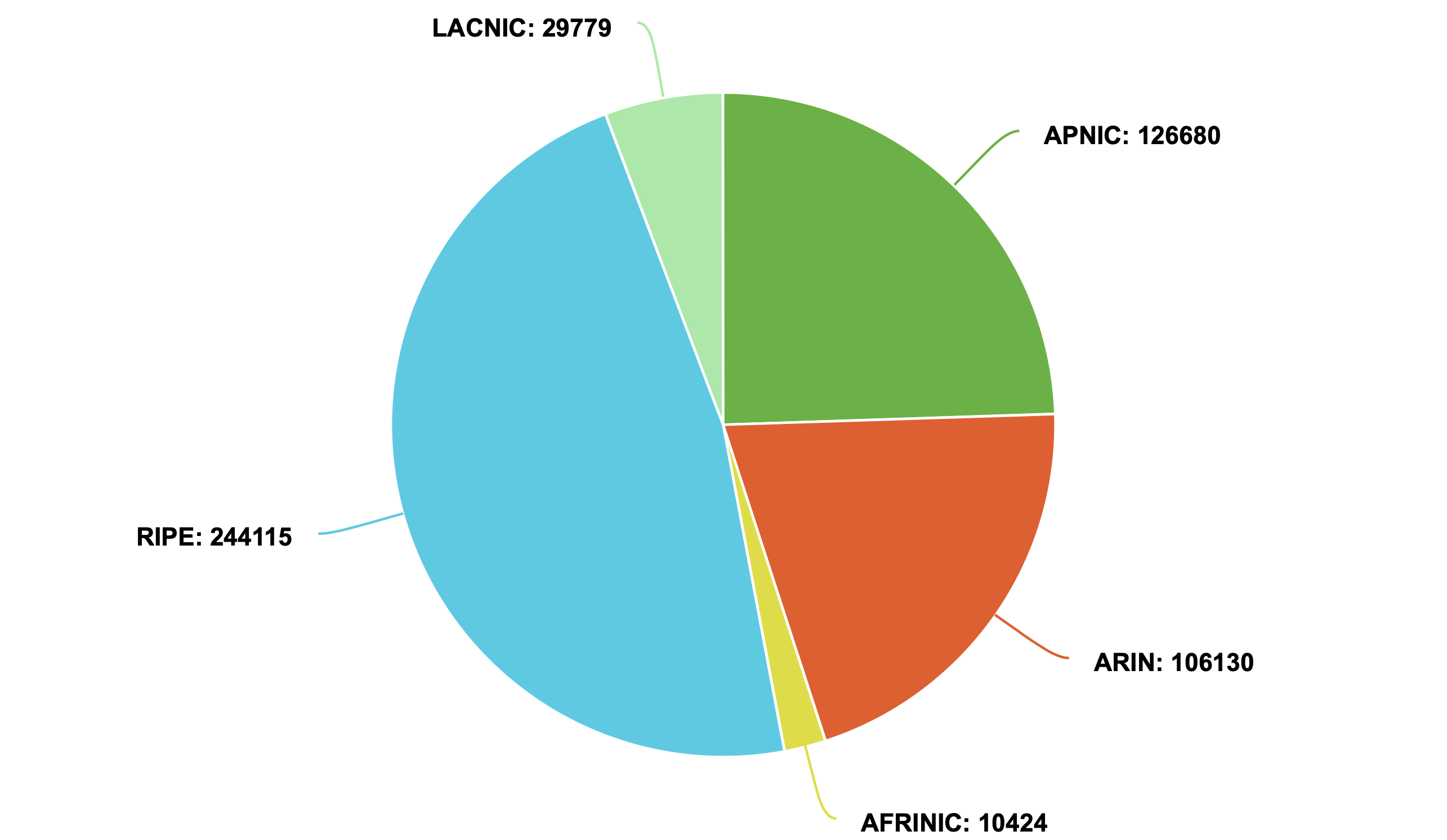

Number of Validated ROA Payloads per Trust Anchor

When a TA malfunctions, traffic usually remains unaffected; however, the malfunction can neutralize RPKI’s protection for the Internet resources under that TA. Detecting a malfunctioning TA helps operators understand their current operating conditions and avoid unnecessary troubleshooting of issues outside their control.

3.5 years in numbers

NTT's Global IP Network was one of the early adopters of RPKI. In a past presentation, I reviewed one year of RPKI operations at NTT and the RPKI-related issues encountered. This article provides a more complete exploration of TA malfunctions.

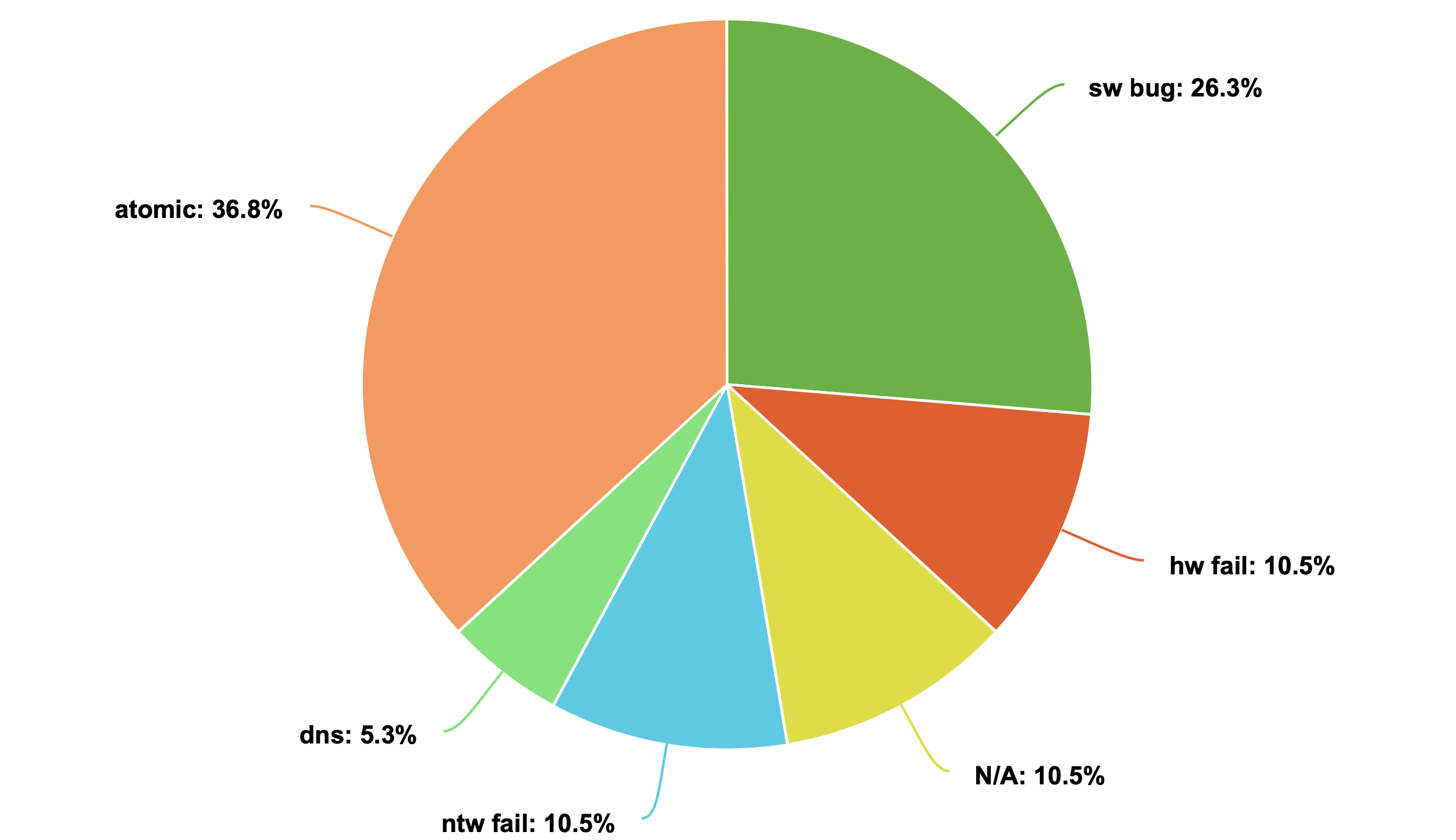

Over the past 3.5 years, there have been at least 21 TA malfunctions. Not all incidents were followed by detailed analyses from the TA operators. However, the causes of most of them have been identified, largely thanks to discussions on mailing lists and community troubleshooting efforts. The image below provides an overview of these causes.

Causes of Trust Anchor malfunctions and percentage of their occurrence

The largest share of TA malfunctions (~37%) stems from a lack of atomicity when updating data at publication points, which leads to data inconsistency. A typical scenario involves manifests pointing to non-existent Route Origin Authorizations (ROAs); if the manifest is high up in the trust chain, a significant number of ROAs is affected. The second most common cause is software bugs, a broad category of incidents that often involve the software used to create manifests or sign objects. These are followed by hardware failures, network failures, DNS issues, and unknown causes (10.5% of cases).
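The atomicity problem can be illustrated with a consistency check between a manifest and the files a validator actually fetched. A manifest lists the files a CA has published along with their SHA-256 hashes. The Python sketch below is a simplification: it assumes the manifest and the repository are plain dictionaries, whereas real manifests are signed CMS objects that must be parsed and validated first, and the `check_manifest` helper is hypothetical.

```python
import hashlib
from typing import Dict, List, Tuple


def check_manifest(
    manifest: Dict[str, str], repository: Dict[str, bytes]
) -> Tuple[List[str], List[str]]:
    """Compare manifest entries (file name -> hex SHA-256) with the fetched files.

    Returns (missing, mismatched): files listed on the manifest but absent from
    the repository, and files whose content does not match the listed hash.
    """
    missing: List[str] = []
    mismatched: List[str] = []
    for name, expected in sorted(manifest.items()):
        content = repository.get(name)
        if content is None:
            missing.append(name)
        elif hashlib.sha256(content).hexdigest() != expected:
            mismatched.append(name)
    return missing, mismatched


if __name__ == "__main__":
    old_roa, new_roa = b"roa-1", b"roa-2"
    manifest = {
        "a.roa": hashlib.sha256(old_roa).hexdigest(),
        "b.roa": hashlib.sha256(new_roa).hexdigest(),
    }
    # Non-atomic publication: the new manifest is already visible, b.roa is not yet.
    print(check_manifest(manifest, {"a.roa": old_roa}))  # (['b.roa'], [])
```

A validator that fetches between "publish the new manifest" and "publish the new ROA" sees exactly this state, even though the publisher would eventually become consistent again.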

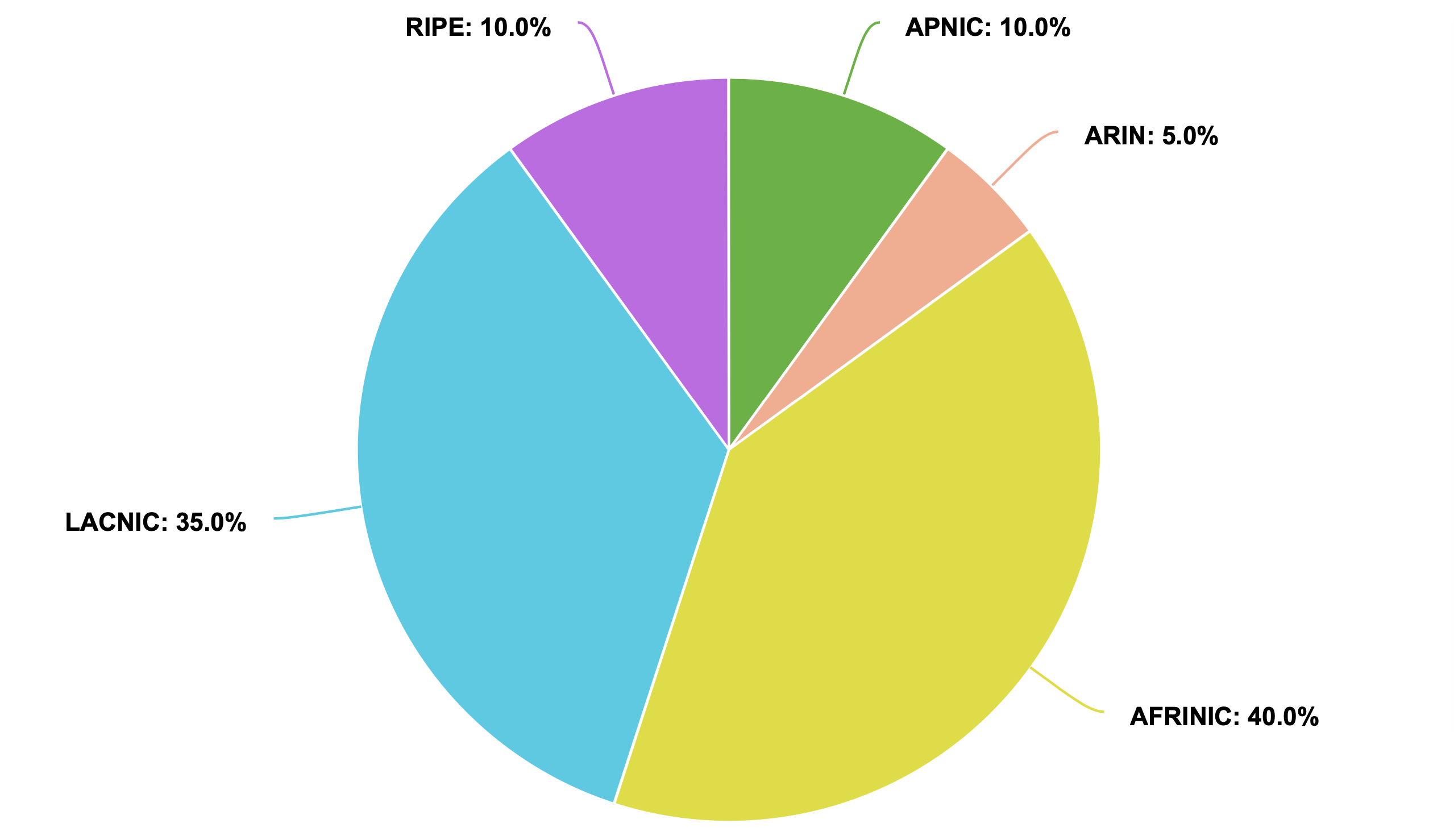

It is important to note that, in many cases, TA operators did not produce technical reports after an incident. If it were not for the initiative of individual members of our community, the causes of many malfunctions would remain unknown. The image below shows the percentage of malfunctions per TA.

Percentage of malfunctions per Trust Anchor.

AFRINIC and LACNIC account for approximately 80% of the malfunctions, while ARIN appears to be the most stable. It is notable that TAs responsible for a smaller proportion of the total ROAs seem to encounter malfunctions more often.

Note: the compilation of TA malfunctions may not be exhaustive. Additionally, mitigations were activated to silence recurring AFRINIC incidents, which may have reduced the number of recorded AFRINIC malfunctions.

Malfunctions

The following is a list of Trust Anchor malfunctions that have occurred since August 2020. Several other TA malfunctions were reported before this date. However, I have chosen August 12, 2020 as the starting date of this investigation because it marks the first event automatically detected by a monitoring tool for which we have logs (BGPalerter at NTT).

ARIN - August 12, 2020

On August 12, 2020, BGPalerter began reporting to users that several prefixes registered with ARIN were “No longer covered by a ROA”. A collaboration between ARIN and the rpki-client team revealed the root cause: a bug in the new software used to sign objects, introduced after an upgrade of the Hardware Security Module (see report). The issue was resolved, and a data cleanup was scheduled for the following days to correct the ROAs.
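Alerts of this kind can be produced by comparing successive validator outputs. The following Python sketch shows the idea under simplifying assumptions; it is not BGPalerter's actual implementation. VRPs are modeled as hypothetical `(prefix, max_length, origin_asn)` tuples, and a monitored prefix counts as covered when at least one VRP prefix contains it.

```python
from ipaddress import ip_network
from typing import Iterable, List, Set, Tuple

VRPTuple = Tuple[str, int, int]  # (prefix, max_length, origin_asn)


def is_covered(prefix: str, vrps: Iterable[VRPTuple]) -> bool:
    """True if at least one VRP prefix contains `prefix` (equal or less specific)."""
    net = ip_network(prefix)
    for vrp_prefix, _max_length, _asn in vrps:
        vrp_net = ip_network(vrp_prefix)
        if net.version == vrp_net.version and net.subnet_of(vrp_net):
            return True
    return False


def no_longer_covered(
    monitored: Iterable[str], before: Set[VRPTuple], after: Set[VRPTuple]
) -> List[str]:
    """Monitored prefixes that were covered by a ROA before but are not covered now."""
    return [p for p in monitored if is_covered(p, before) and not is_covered(p, after)]


if __name__ == "__main__":
    before = {("192.0.2.0/24", 24, 64500), ("198.51.100.0/22", 24, 64501)}
    after = {("198.51.100.0/22", 24, 64501)}  # the first VRP vanished
    print(no_longer_covered(["192.0.2.0/24", "198.51.100.0/24"], before, after))
```

Because the check looks at coverage rather than at individual ROAs, it fires for the resource holder's own prefixes as soon as the VRPs protecting them vanish, regardless of the underlying cause.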

TWNIC/APNIC - February 6, 2021

On February 6, 2021, the Taiwan Network Information Center (TWNIC), a CA under APNIC’s TA, experienced a significant malfunction that caused all of its ROAs to disappear. TWNIC reported a hardware failure as the cause.

RIPE - March 18, 2021

On March 18, 2021, we reported to RIPE NCC staff that ROAs had disappeared from RIPE’s TA. This had happened before, but this time our report included a detailed analysis that allowed the NCC staff to track down the issue: a manifest referencing certificates not present in the repository (an atomicity issue). The RIPE NCC promptly addressed the situation and provided a great example of how incident reports should be written.

LACNIC - June 17, 2021

On June 17, 2021, we detected that the LACNIC repository had completely disappeared over rsync. We promptly contacted LACNIC staff, who fixed the issue within a short time. However, no public report was provided.

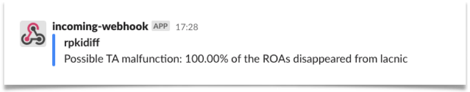

Alert about LACNIC TA malfunction on June 17, 2021

JPNIC - February 1, 2022

On February 1, 2022, a partial TA malfunction was imminent at JPNIC. Several organizations, including NTT, started receiving notifications about a suspiciously large number of ROAs expiring within the next 2 hours. We contacted JPNIC, which managed to fix the issue 1 minute before expiration, averting a partial, but potentially significant, TA malfunction at the last minute (literally). JPNIC later released a public report indicating that a full disk (filled by access logs) had prevented the software from updating the ‘nextUpdate’ field in CRLs and manifests.
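The early warning here came from looking at expiration times rather than at the current set of ROAs. A minimal Python sketch of such a check follows, assuming each object has already been reduced to a name and an expiry time (the nextUpdate field for manifests and CRLs, the notAfter field for certificates). The `expiring_soon` and `should_alert` helpers and the 10% alert threshold are hypothetical; only the two-hour window comes from this incident.

```python
from datetime import datetime, timedelta, timezone
from typing import Dict, List


def expiring_soon(
    expiries: Dict[str, datetime], now: datetime, window: timedelta = timedelta(hours=2)
) -> List[str]:
    """Names of objects whose expiry falls within `window` of `now` (expired ones included)."""
    deadline = now + window
    return sorted(name for name, expires in expiries.items() if expires <= deadline)


def should_alert(
    expiries: Dict[str, datetime],
    now: datetime,
    window: timedelta = timedelta(hours=2),
    threshold: float = 0.10,
) -> bool:
    """Alert when a suspiciously large fraction of objects is about to expire."""
    if not expiries:
        return False
    return len(expiring_soon(expiries, now, window)) / len(expiries) >= threshold


if __name__ == "__main__":
    now = datetime(2022, 2, 1, tzinfo=timezone.utc)
    expiries = {
        "ca.mft": now + timedelta(hours=1, minutes=59),  # nextUpdate of a manifest
        "ca.crl": now + timedelta(hours=1, minutes=59),  # nextUpdate of a CRL
        "x.roa": now + timedelta(days=7),
    }
    print(expiring_soon(expiries, now))  # ['ca.crl', 'ca.mft']
    print(should_alert(expiries, now))   # True
```

Alerting on a fraction rather than on single objects keeps routine, short-lived expirations from generating noise while still flagging a stalled signer.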

RIPE - February 16, 2022

On February 16, 2022, rrdp.ripe.net became unreachable, forcing all RPKI validators to fall back to rsync. This caused a cascading failure in which the rsync service became overloaded. The issue was resolved by RIPE NCC staff, who once again provided a superb incident report, highlighting a chain of events involving a DNS misconfiguration, two subpar internal monitoring configurations, and an overloaded rsync service.

AFRINIC - November 20, 2022

On November 20, 2022, an AFRINIC top-level manifest expired, causing 73.95% of ROAs to disappear for ~7 hours. See report. The situation was discussed and troubleshot on the NANOG mailing list. We are not aware of any technical report from AFRINIC.

AFRINIC - January 1, 2023

On January 1, 2023, at 03:27 UTC, AFRINIC encountered an issue with a top-level manifest. This time, the manifest referenced files not present in the repository. This can cause two types of issues: (1) the most obvious one, where the missing ROAs are not available in the repository and therefore do not appear in the output; and (2) some validators may mark the manifest as corrupted and discard it entirely. This incident led to 77.33% of ROAs disappearing from AFRINIC’s TA. See report.
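The two failure modes can be compared with a small Python sketch. The `usable_roas` and `share_lost` helpers are hypothetical, and real validators differ in the details (for instance, a validator may fall back to cached data from a previous successful fetch); the point is only how much of a publication point disappears under each behavior when a single listed file is missing.

```python
from typing import Set


def usable_roas(manifest: Set[str], fetched: Set[str], strict: bool) -> Set[str]:
    """ROA files a validator would use from one publication point.

    strict=False: skip only the files that are missing.
    strict=True: treat the manifest as corrupted and discard the whole publication point.
    """
    missing = manifest - fetched
    if missing and strict:
        return set()
    # Files present in the repository but not listed on the manifest are ignored.
    return manifest & fetched


def share_lost(manifest: Set[str], fetched: Set[str], strict: bool) -> float:
    """Percentage of the manifest's ROAs that disappear from the validator output."""
    if not manifest:
        return 0.0
    kept = usable_roas(manifest, fetched, strict)
    return 100.0 * (len(manifest) - len(kept)) / len(manifest)


if __name__ == "__main__":
    manifest = {f"roa-{i}.roa" for i in range(100)}
    fetched = manifest - {"roa-7.roa"}  # a single listed file is missing
    print(share_lost(manifest, fetched, strict=False))  # 1.0
    print(share_lost(manifest, fetched, strict=True))   # 100.0
```

Under the stricter behavior, one missing file on a manifest that lists many ROAs is enough to remove all of them, which is why inconsistencies high up in the trust chain produce such large percentages.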

The January 1 incident marked the start of a series of similar events that has continued to this day. Notable ones include:

- 7 July 2023 - 25.35% of ROAs disappear from AFRINIC. See report.

- 25 August 2023 - 25.60% of ROAs disappear from AFRINIC. See report.

- 24 October 2023 - 22.51% of ROAs disappear from AFRINIC. See report. The issue recurred several times in the following months. An exhaustive report was provided by Job Snijders on the AFRINIC mailing list.

- 18 January 2024 - 50.55% of ROAs disappear from AFRINIC. See report.

Whether validators observe this issue depends on when they fetch the repository and on how frequently the repository is updated. Over time, the issue has become more frequent due to the rising number of ROA creations and deletions in the AFRINIC region: more frequent non-atomic operations on the repository create more opportunities for validators to fetch it while it is in an inconsistent state. This is further exacerbated by a few manifests that list a large number of ROAs. AFRINIC has not yet provided any technical report or fix. Unfortunately, operators had to take several steps to reduce the alert noise generated by these recurring incidents.
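A back-of-the-envelope model helps explain why the problem grew with the number of ROA changes. Assume, purely for illustration, that each non-atomic update leaves the repository inconsistent for w seconds, that there are n updates per day whose windows never overlap, and that a validator fetches at uniformly random moments. The chance that a single fetch sees an inconsistent state is then p = n · w / 86,400, and the chance that at least one of k independent fetches does is 1 − (1 − p)^k. The helper names below are hypothetical and the numbers are made up.

```python
SECONDS_PER_DAY = 86_400


def p_inconsistent_fetch(updates_per_day: int, window_seconds: float) -> float:
    """Chance that a fetch at a uniformly random time hits an inconsistency window.

    Assumes the windows never overlap, so they cover updates * window seconds per day.
    """
    exposed = min(updates_per_day * window_seconds, SECONDS_PER_DAY)
    return exposed / SECONDS_PER_DAY


def p_at_least_one(updates_per_day: int, window_seconds: float, fetches_per_day: int) -> float:
    """Chance that at least one of the day's independent fetches sees an inconsistent state."""
    p = p_inconsistent_fetch(updates_per_day, window_seconds)
    return 1 - (1 - p) ** fetches_per_day


if __name__ == "__main__":
    # Made-up numbers: 5-second windows, one fetch every 5 minutes (288 per day).
    for updates in (100, 200):
        print(updates, p_at_least_one(updates, 5, 288))
```

With these illustrative values, a single fetch has only about a 0.6% chance of hitting an inconsistent state, yet a validator fetching every 5 minutes has roughly an 81% chance of doing so at least once per day; doubling the update rate pushes that above 96%.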

AFRINIC - May 14, 2023

On May 14, 2023, AFRINIC experienced intermittent reachability on RRDP. The issue persisted for more than 10 days and was reported on the AFRINIC mailing list. AFRINIC updated the community on its status page, suggesting that the servers were overwhelmed. Although the issue was initially marked as resolved on May 17, 2023, it persisted for several more days. While the first day saw total unreachability over RRDP, the following days were characterized by bandwidth problems, with users on the AFRINIC mailing list reporting download speeds as low as 3 KB/s. Validators often timed out while fetching, significantly slowing down the entire validation cycle. As a temporary mitigation, RRDP was disabled. You can read why rsync performs better than RRDP on small repositories here.

During the same incident, it was also reported on the mailing list that the AFRINIC repository was unreachable over IPv6. This issue was swiftly rectified on the same day, with IPv6 monitoring subsequently added.

LACNIC - April 15 to June 6, 2023

On April 15, 2023, the LACNIC TA disappeared completely. See report. The phenomenon occurred intermittently for approximately three hours. An initial investigation suggested that the cause could have been a load balancer malfunction that led RPKI validators to connect to different backends. These backends presented the same RRDP session ID even though their contents were not synchronized.
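To see why unsynchronized backends sharing a session ID are a problem, recall how an RRDP client (RFC 8182) decides what to download: it remembers the session ID and serial from its last sync and compares them with those advertised in notification.xml. The Python sketch below models that decision. `RRDPState` and `next_action` are hypothetical names, and treating a serial that goes backwards as a reason to reload the snapshot is this sketch's own choice rather than a rule quoted from the specification.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class RRDPState:
    """What a client remembers about a repository after its last successful sync."""
    session_id: Optional[str] = None
    serial: int = 0


def next_action(state: RRDPState, notif_session: str, notif_serial: int) -> str:
    """Decide how to sync after reading notification.xml.

    Returns 'snapshot', 'deltas' or 'up-to-date'.
    """
    if state.session_id != notif_session:
        return "snapshot"  # new or changed session: start over from a full snapshot
    if notif_serial == state.serial:
        return "up-to-date"
    if notif_serial > state.serial:
        return "deltas"  # apply deltas serial+1 .. notif_serial
    # Serial went backwards within the same session: the server state is inconsistent
    # (e.g. unsynchronized backends behind a load balancer). Resync from a snapshot.
    return "snapshot"


if __name__ == "__main__":
    state = RRDPState(session_id="abc", serial=100)
    print(next_action(state, "abc", 103))  # deltas
    print(next_action(state, "abc", 95))   # snapshot
```

Two backends with the same session ID but different serials make a client alternate between delta processing and resynchronization, and two backends with the same serial but different contents cannot be told apart at all. This also illustrates why establishing a new RRDP session works as a mitigation: a changed session ID forces every client to start again from a full snapshot.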

LACNIC experienced other episodes of complete disappearance with similar symptoms:

- 10 May 2023 - All ROAs disappear from LACNIC. See report. The issue persisted, with some validators requiring a reset of the RRDP session to resume functioning. See the LACNOG mailing list for additional information.

- 6 June 2023 - Another total disappearance, lasting about 1 hour. See report. LACNIC staff were promptly informed, and a new RRDP session was established to temporarily mitigate the problem while a proper software patch was being developed.

The event has not recurred since. I am not aware of any conclusive post-mortem about this incident, which may be connected with the software bug discovered in the following incident.

nic.br/LACNIC - May 15 and 16, 2023

On May 15 and 16, 2023, nic.br (a CA under LACNIC) suffered two disappearances attributed to a corrupted root manifest. Because nic.br is a large CA, the issue affected 41.37% of LACNIC's ROAs. See report. This happened concurrently with the recurring TA malfunctions described in the previous section, although no report has linked the two cases. This incident also showed different symptoms: a snapshot.xml referencing the same certificate multiple times, and manifests reporting incorrect certificate hashes. A software bug was identified, and a patch was installed on May 31. See the complete chain of events on the registro.br mailing list.
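A duplicate entry like the one in this incident can be detected directly in the RRDP snapshot, where each object appears as a `<publish uri="...">` element in the `http://www.ripe.net/rpki/rrdp` XML namespace (RFC 8182). A minimal Python sketch using only the standard library follows; the `duplicate_uris` helper and the example URIs are hypothetical.

```python
import xml.etree.ElementTree as ET
from collections import Counter
from typing import List, Union

RRDP_NS = "{http://www.ripe.net/rpki/rrdp}"


def duplicate_uris(snapshot_xml: Union[str, bytes]) -> List[str]:
    """Return the URIs that appear in more than one <publish> element of a snapshot."""
    root = ET.fromstring(snapshot_xml)
    uris = [
        elem.get("uri")
        for elem in root.iter(f"{RRDP_NS}publish")
        if elem.get("uri") is not None
    ]
    return sorted(uri for uri, count in Counter(uris).items() if count > 1)


if __name__ == "__main__":
    snapshot = """<snapshot xmlns="http://www.ripe.net/rpki/rrdp" version="1"
        session_id="9df4b597-af9e-4dca-bdda-719cce2c4e28" serial="2">
      <publish uri="rsync://repo.example.net/ca/a.cer">AAAA</publish>
      <publish uri="rsync://repo.example.net/ca/b.roa">AAAA</publish>
      <publish uri="rsync://repo.example.net/ca/a.cer">AAAA</publish>
    </snapshot>"""
    print(duplicate_uris(snapshot))  # ['rsync://repo.example.net/ca/a.cer']
```

The incorrect manifest hashes reported in the same incident would be caught by a manifest-versus-repository hash comparison, so the two checks complement each other.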

LACNIC - January 18, 2024

January 18, 2024 was not a good day for the RPKI ecosystem. In addition to the AFRINIC issue reported above, LACNIC also had a major incident. See report. This incident unfolded in two acts: a first alert at around 12:20 UTC, indicating the expiration of 100% of the ROAs, was followed by a second alert reporting the disappearance of all ROAs at around 14:38 UTC. According to LACNIC sources, this was caused by a bug in the signing software introduced by a recent update.

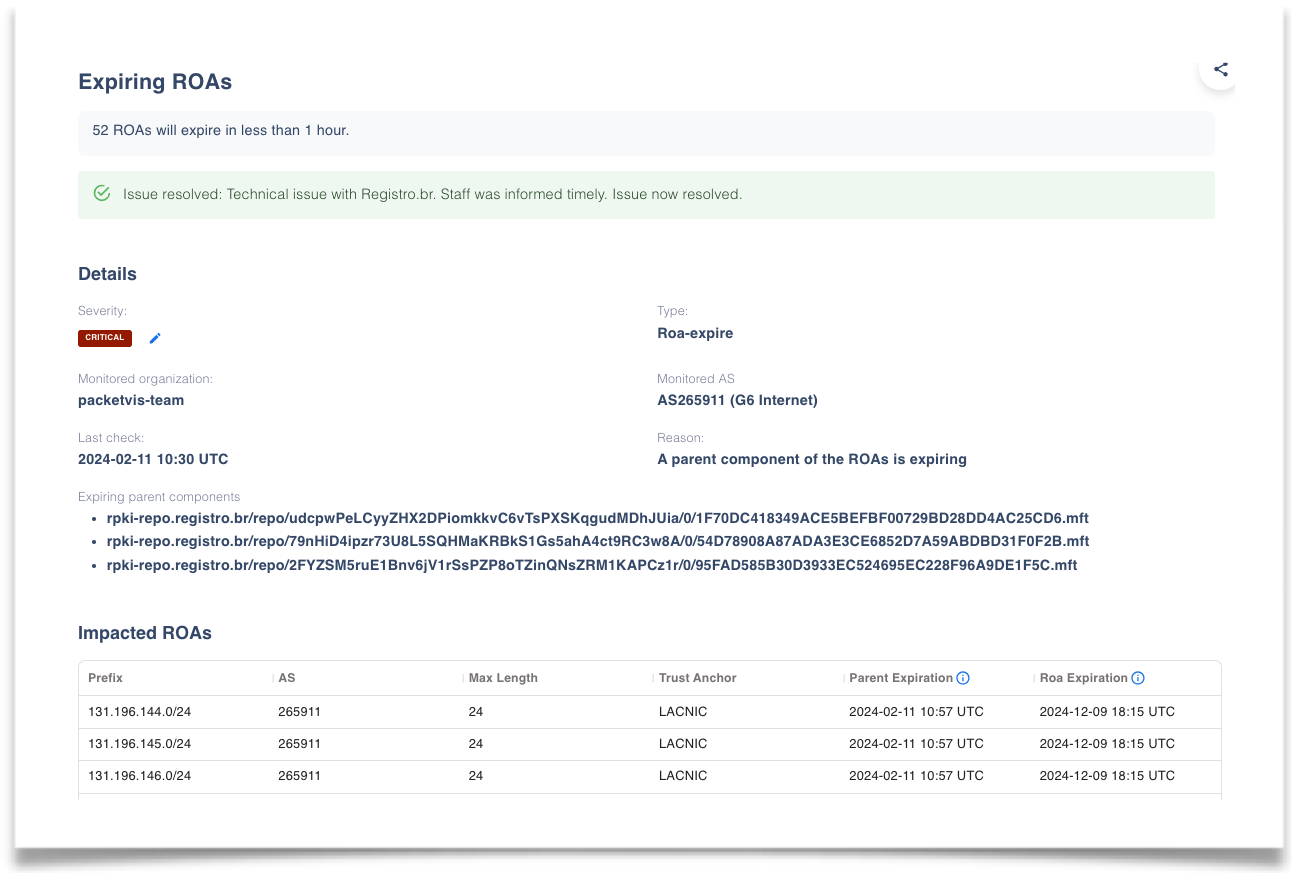

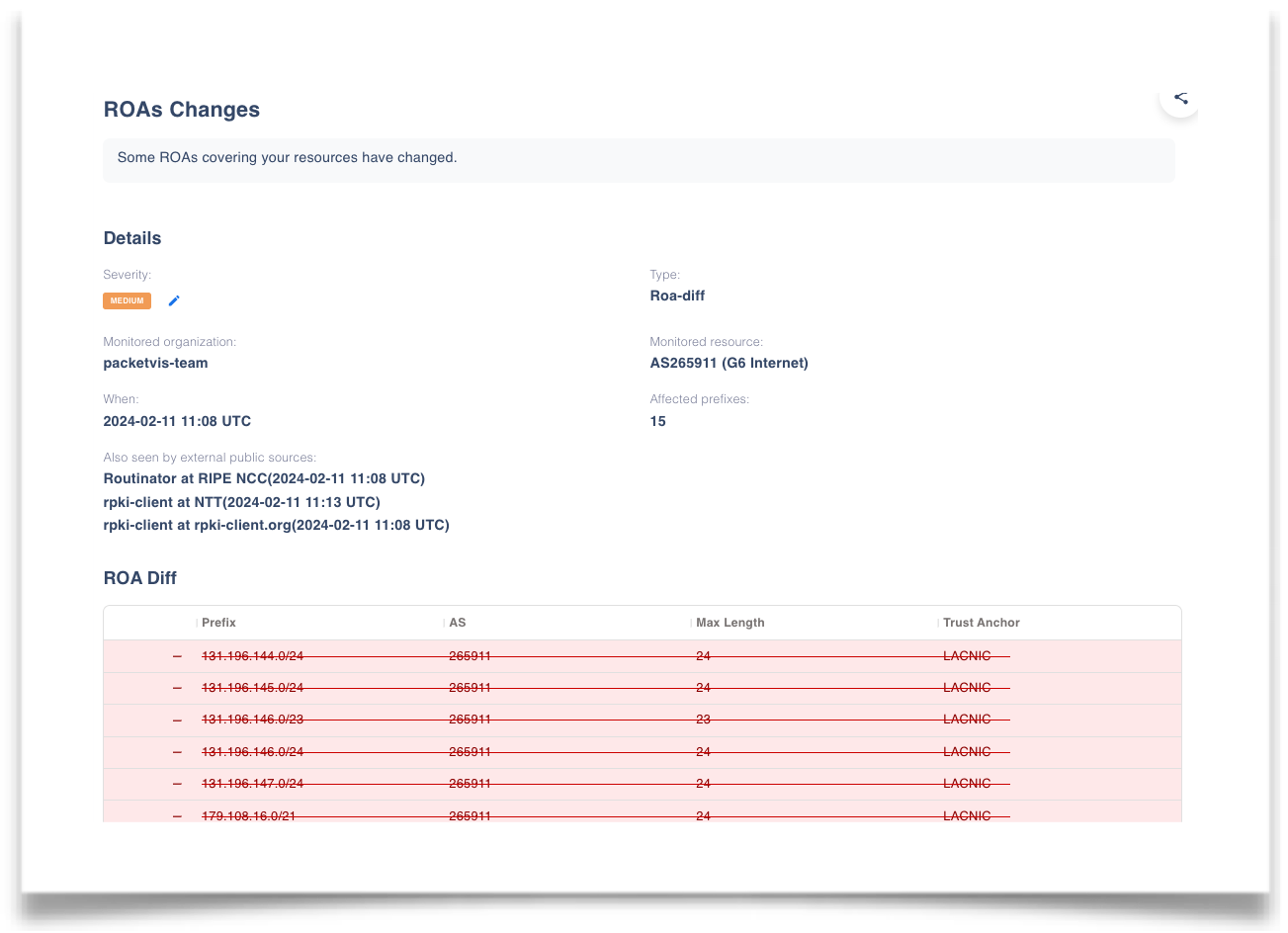

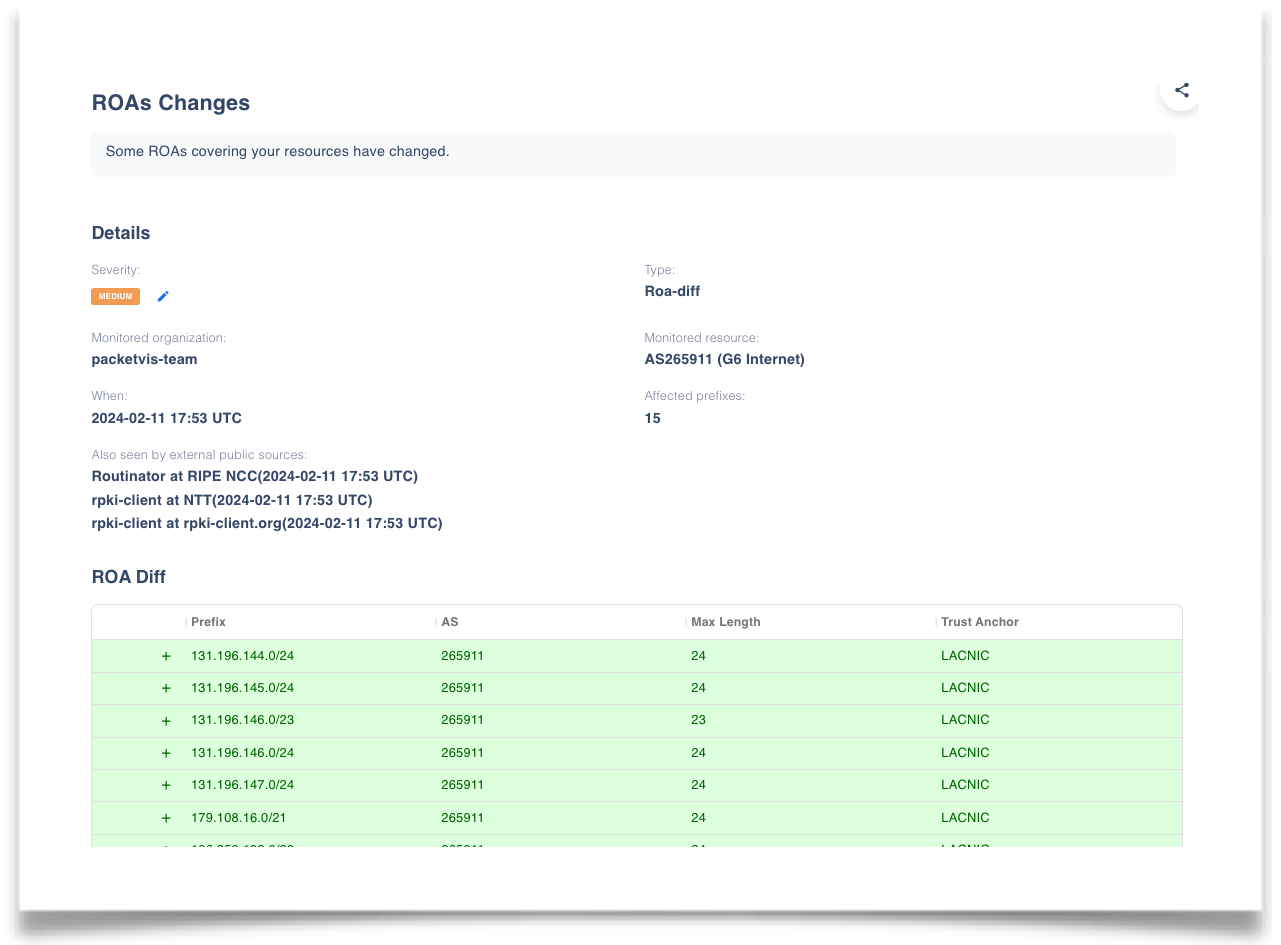

nic.br/LACNIC - February 11, 2024

On February 11, 2024, an issue affected nic.br: a gap of approximately six hours in manifest renewal caused a considerable number of ROAs to disappear. Initially, users received alerts warning of the imminent expiration of a parent component, directly identifying the specific manifests that were expiring (an example here). Later, when the ROAs expired, users were informed of the missing ROAs. Finally, six hours later, users were informed that the ROAs had been restored. The images depict these three events.

Three successive alerts about the nic.br malfunction of February 11, 2024

Conclusions

The inherent complexity of the RPKI architecture occasionally results in Trust Anchor malfunctions. Generally, RPKI validators mitigate these efficiently through caching, which keeps traffic flowing unaffected. Nonetheless, given their important role, Trust Anchors require greater attention in three main areas:

- Prompt resolution of bugs is essential. Recurrent issues of the same nature suggest a complacency that is incompatible with security protocols.

- RIRs must thoroughly debrief the community post-incident, providing technical details rather than mere status updates.

- Enhanced monitoring by RIRs is necessary to identify problems before users flag them. Home-grown tools can be paired with tools such as BGPalerter and PacketVis, which have demonstrated their effectiveness over the years and are widely endorsed by the community.
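The caching mentioned above deserves a closer look, since it is what keeps most of these malfunctions invisible to traffic. The Python sketch below assumes a hypothetical validator that keeps, per publication point, the last set of objects that passed validation together with the time they stop being valid; `PublicationPointCache` and `objects_to_use` are illustrative names. When a fetch fails or is inconsistent, the validator keeps using the cached objects, but only until they expire.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import Optional, Set


@dataclass
class PublicationPointCache:
    """Last known-good objects for one publication point and when they stop being valid."""
    objects: Set[str] = field(default_factory=set)
    expires: Optional[datetime] = None


def objects_to_use(
    cache: PublicationPointCache,
    fetched: Optional[Set[str]],
    fetched_expires: Optional[datetime],
    now: datetime,
) -> Set[str]:
    """Prefer a fresh, consistent fetch; otherwise fall back to unexpired cached objects.

    `fetched` is None when the fetch failed or did not pass consistency checks.
    """
    if fetched is not None:
        cache.objects = set(fetched)  # refresh the known-good copy
        cache.expires = fetched_expires
        return set(cache.objects)
    if cache.expires is not None and now < cache.expires:
        return set(cache.objects)  # stale but still valid: VRPs keep being served
    return set()  # the cached data has expired too: the ROAs disappear


if __name__ == "__main__":
    t0 = datetime(2024, 1, 18, 12, 0)
    valid_until = datetime(2024, 1, 19, 12, 0)
    cache = PublicationPointCache()
    print(objects_to_use(cache, {"a.roa"}, valid_until, t0))                # {'a.roa'}
    print(objects_to_use(cache, None, None, datetime(2024, 1, 18, 18, 0)))  # {'a.roa'}
    print(objects_to_use(cache, None, None, datetime(2024, 1, 20, 0, 0)))   # set()
```

The last branch is the important limit: caching buys time, but a malfunction that outlasts the validity of the cached objects, as with the expiring manifests described earlier, still makes ROAs disappear.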

Acknowledgments

Special recognition is extended to Job Snijders (Fastly) for his dedication to addressing Trust Anchor malfunctions through public mailing lists, and to Carlos Martinez-Cagnazzo (LACNIC) for his efforts in diagnosing several LACNIC issues and promptly resolving some of them.

About the author

Massimo Candela is a Principal Engineer at NTT. Throughout his career, he has focused on the research and development of applications that guide decisions and facilitate day-to-day Internet operations. He contributes to the automation and monitoring of NTT’s Global IP Network.